Cluster architecture

192.168.10.186 ceph1 admin, mon, mgr, osd, rgw

Deployment

[root@10dot186 ~]# vim /etc/hosts

Add the following to the configuration file:
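Only the ceph1 mapping (192.168.10.186) is listed in the architecture section, so the sketch below adds just that entry. The `add_host` helper is a hypothetical name, not something from the original setup, and it writes to a scratch file so it can be tried without touching the real `/etc/hosts`. Entries for ceph2 and ceph3 would be added the same way with their own addresses.

```shell
# Append "IP NAME" to a hosts file unless NAME is already mapped there.
# add_host is a hypothetical helper for illustration.
add_host() {
  ip="$1"; name="$2"; file="${3:-/etc/hosts}"
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demonstrate against a scratch file so nothing on the system is modified.
HOSTS_FILE="$(mktemp)"
add_host 192.168.10.186 ceph1 "$HOSTS_FILE"
add_host 192.168.10.186 ceph1 "$HOSTS_FILE"   # second call is a no-op
cat "$HOSTS_FILE"
```

Calling `add_host` without the third argument targets `/etc/hosts`, which needs root.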

mon

ceph-deploy install ceph1 ceph2 ceph3
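For context, here is a rough sketch of how the install step usually sits inside a monitor bootstrap with `ceph-deploy`. The `new`, `mon create-initial` and `admin` steps follow the upstream quick start and are an assumption about what was run here. The `run` wrapper and the `DRY_RUN` variable are hypothetical helpers that print each command by default instead of executing it.

```shell
NODES="ceph1 ceph2 ceph3"

# Echo each command instead of executing it unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Assumed sequence; only the install step appears in the original post.
bootstrap_mons() {
  # $NODES is left unquoted on purpose so it splits into one argument per host.
  run ceph-deploy install $NODES
  run ceph-deploy new $NODES
  run ceph-deploy mon create-initial
  run ceph-deploy admin $NODES
}

bootstrap_mons
```

Running the script with `DRY_RUN=0` from the admin node's working directory would execute the commands for real.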

mgr

ceph-deploy mgr create ceph1 ceph2 ceph3
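Once the mgr daemons are up, the dashboard module has to be enabled before there is anything to look at. A minimal sketch, assuming the stock `ceph mgr module enable dashboard` and `ceph mgr services` commands; newer releases also need a certificate and a login configured, which is not shown. The `dashboard_url` helper and the sample JSON are hypothetical, for illustration only.

```shell
# Extract the dashboard URL from `ceph mgr services` JSON read on stdin.
# dashboard_url is a hypothetical helper for illustration.
dashboard_url() {
  sed -n 's/.*"dashboard"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# On a live cluster (commented out so the sketch runs anywhere):
#   ceph mgr module enable dashboard
#   ceph mgr services | dashboard_url

# Hypothetical sample output; the real URL and port depend on the Ceph release.
printf '{\n    "dashboard": "http://ceph1:7000/"\n}\n' | dashboard_url
```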

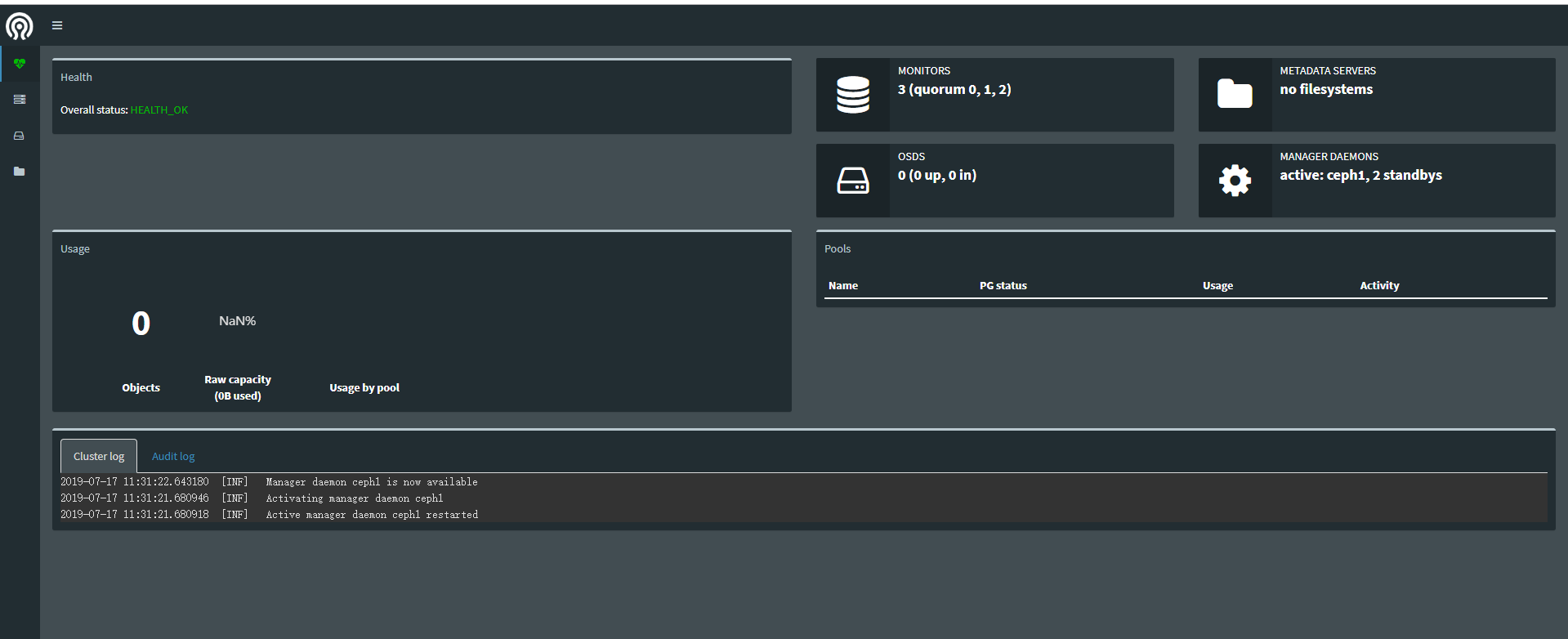

Now take a look at the dashboard:

osd

Create a logical volume on each machine.
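As a rough sketch of what this step can look like, the function below prints the LVM commands for one host followed by the matching OSD creation. The disk `/dev/sdb`, the volume group `ceph-vg` and the logical volume `osd-lv` are all hypothetical names, and the `--data vg/lv` form assumes ceph-deploy 2.x. Nothing is executed; the commands are only printed for review.

```shell
DEVICE="/dev/sdb"   # hypothetical spare disk on each node
VG="ceph-vg"        # hypothetical volume group name
LV="osd-lv"         # hypothetical logical volume name

# Print the commands for one host: LVM setup on the node,
# then OSD creation from the admin node (ceph-deploy 2.x syntax assumed).
osd_plan() {
  host="$1"
  echo "ssh $host pvcreate $DEVICE"
  echo "ssh $host vgcreate $VG $DEVICE"
  echo "ssh $host lvcreate -l 100%FREE -n $LV $VG"
  echo "ceph-deploy osd create --data $VG/$LV $host"
}

for h in ceph1 ceph2 ceph3; do
  osd_plan "$h"
done
```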

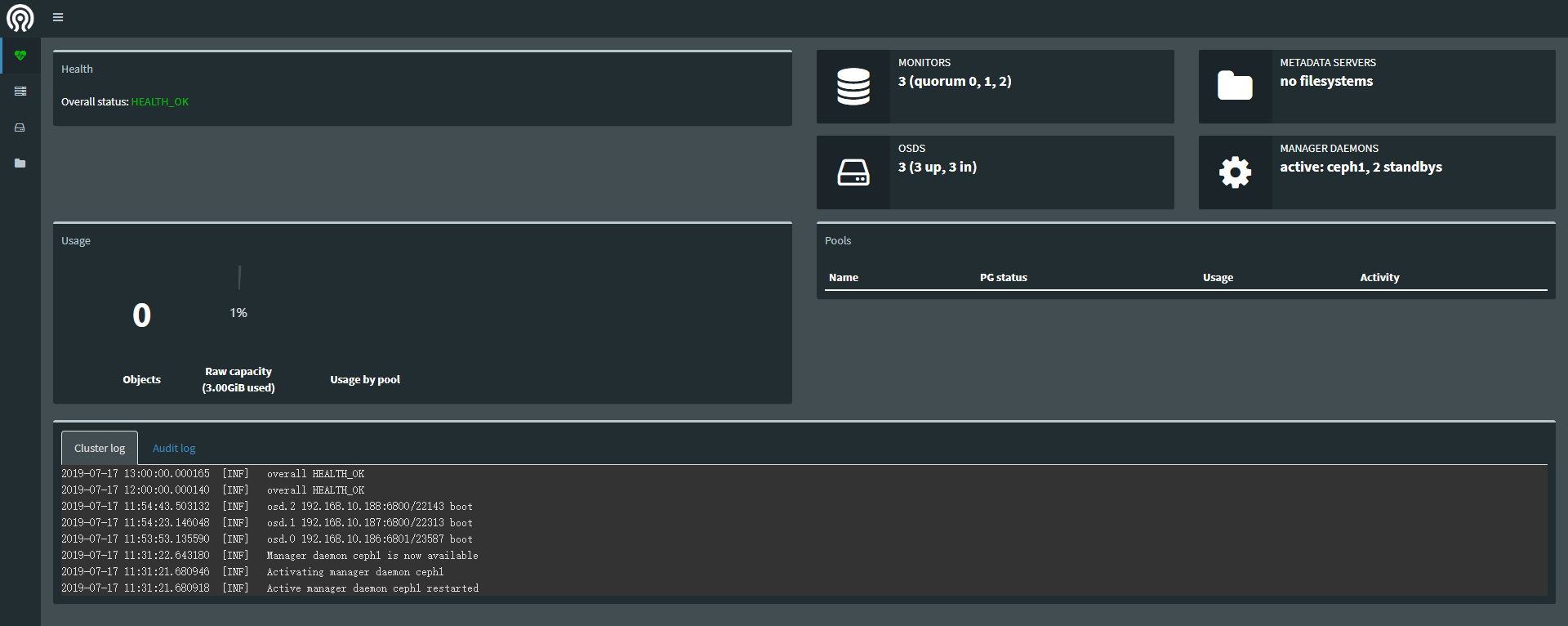

Now take a look at the dashboard:

rgw cluster

ceph-deploy install --rgw ceph1 ceph2 ceph3
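After the packages are installed, the gateways are typically created with `ceph-deploy rgw create`, and each one then answers anonymous requests on port 7480 by default. The check below is a minimal sketch under those assumptions; `is_rgw_response` and `check_gateways` are hypothetical helper names, and the probe itself is left commented out because it needs a live cluster.

```shell
# Succeeds if stdin looks like an RGW answer to an anonymous "GET /".
is_rgw_response() {
  grep -q '<ListAllMyBucketsResult'
}

# Probe each gateway; 7480 is assumed to be the (default) RGW port.
check_gateways() {
  for host in "$@"; do
    if curl -s --max-time 5 "http://${host}:7480/" | is_rgw_response; then
      echo "${host}: rgw ok"
    else
      echo "${host}: rgw not responding"
    fi
  done
}

# On the admin node, after `ceph-deploy rgw create ceph1 ceph2 ceph3`:
#   check_gateways ceph1 ceph2 ceph3
```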

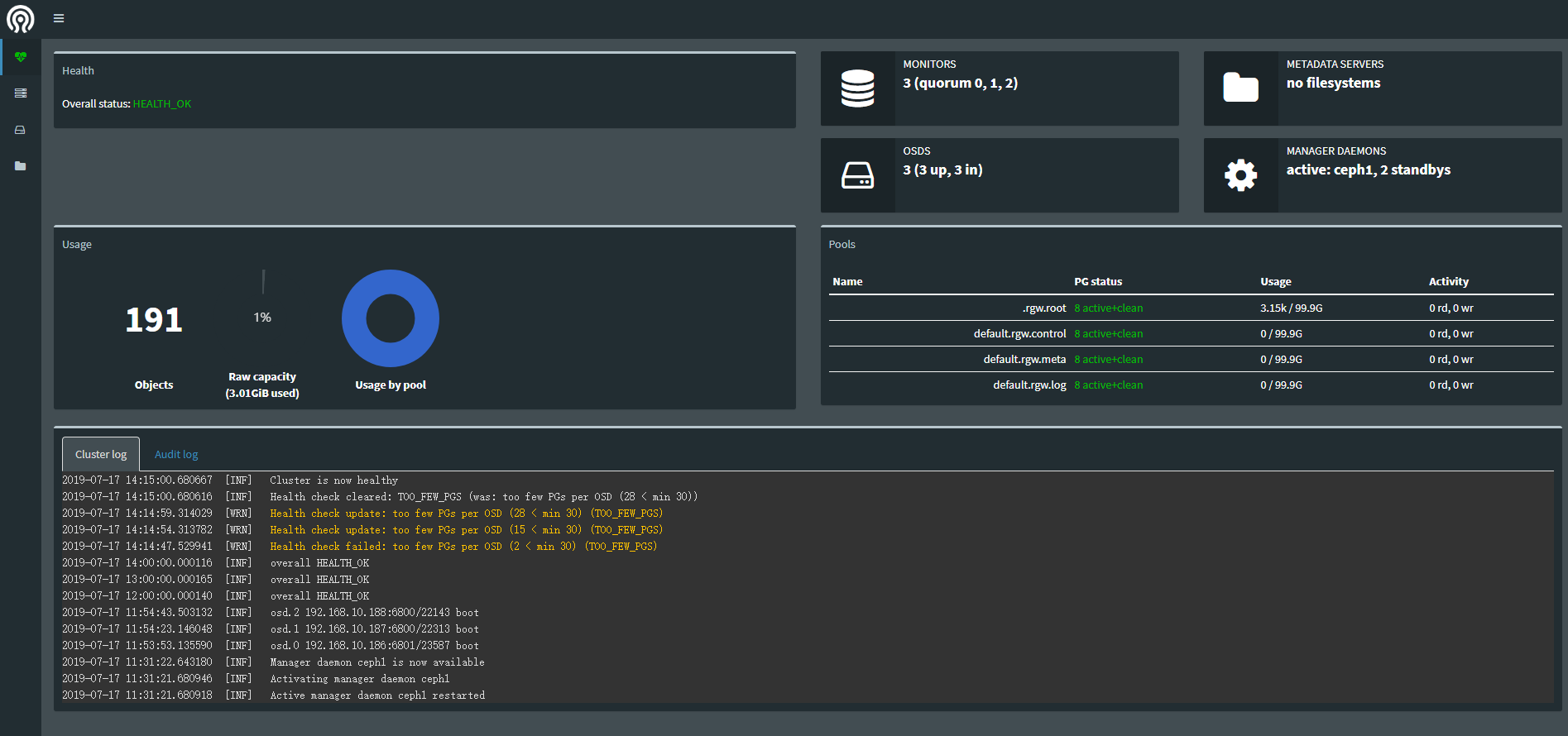

Now take a look at the dashboard:

NGINX proxy

Installing NGINX is not covered here.
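For the proxy itself, a minimal sketch of a load-balancing config in front of the three gateways might look like the output of the function below. The upstream name `rgw_backend`, the listen port 80 and the backend port 7480 are assumptions, not values taken from the original setup, and `rgw_proxy_conf` is a hypothetical helper that only prints the config.

```shell
# Emit an nginx config that load-balances across the given rgw hosts.
# Usage: rgw_proxy_conf PORT HOST...
rgw_proxy_conf() {
  port="$1"; shift
  echo "upstream rgw_backend {"
  for host in "$@"; do
    echo "    server ${host}:${port};"
  done
  echo "}"
  # Quoted heredoc delimiter keeps nginx's own $host variable unexpanded.
  cat <<'EOF'
server {
    listen 80;
    location / {
        proxy_pass http://rgw_backend;
        proxy_set_header Host $host;
    }
}
EOF
}

rgw_proxy_conf 7480 ceph1 ceph2 ceph3
```

The output could be redirected into a file under the NGINX configuration directory and validated with `nginx -t` before reloading.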

s3 and swift

The detailed setup is not described here; see my previous article.