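By default, k8s uses local storage, which gives the cluster poor disaster tolerance. Ceph is an open-source distributed storage system that is commonly paired with OpenStack; many cloud computing companies already run it in production, where its reliability has been proven. This post describes how to use Ceph in a k8s environment.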

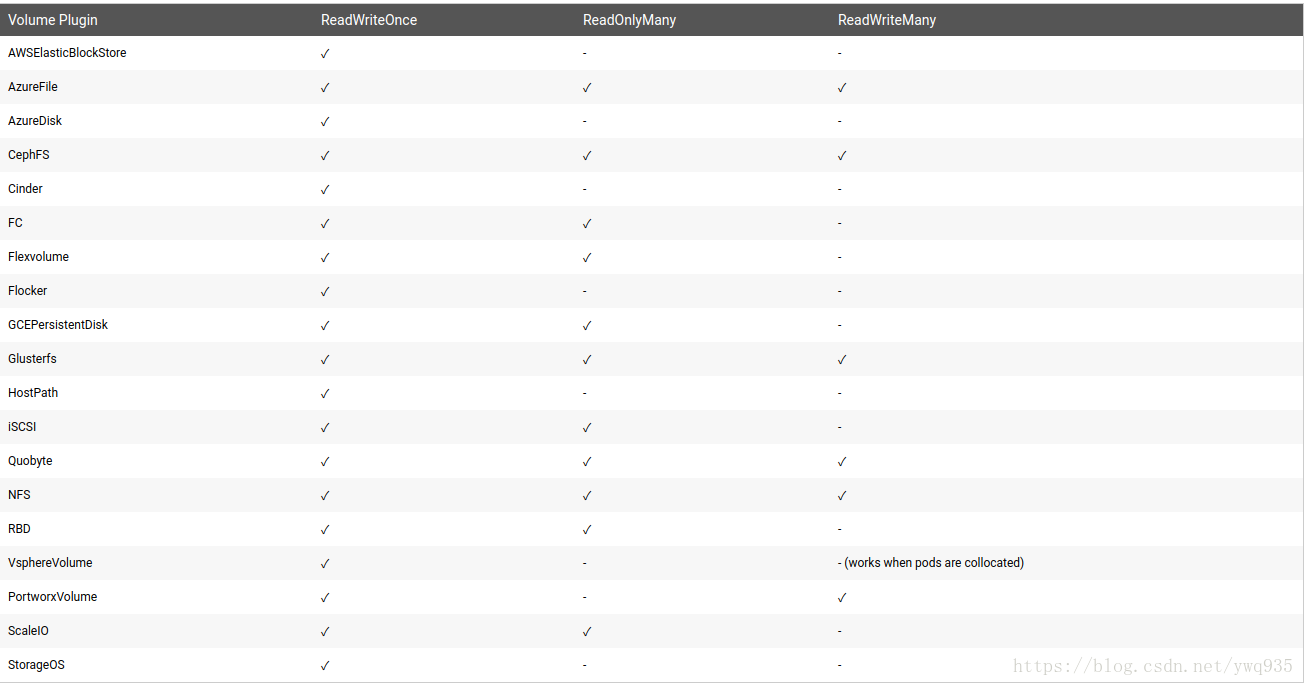

Kubernetes supports the latter two storage interfaces. The supported access modes are shown in the figure below:

Ceph side

Create a pool

Create a pool named pool_1 with 90 PGs (placement groups):

    ceph osd pool create pool_1 90
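The value 90 above is the author's choice. As a rough cross-check, a widely quoted Ceph rule of thumb targets about 100 PGs per OSD divided by the replica count, rounded up to a power of two. The sketch below only does that arithmetic; the function name `suggest_pg_num` and the OSD and replica counts passed to it are illustrative assumptions, not values from this cluster.

```shell
# Sketch: estimate pg_num from the "100 PGs per OSD" rule of thumb.
# suggest_pg_num is a hypothetical helper, not a Ceph command.
suggest_pg_num() {
  pg_osds="$1"       # number of OSDs in the cluster (assumed input)
  pg_replicas="$2"   # pool replica count (assumed input)
  pg_target=$(( pg_osds * 100 / pg_replicas ))
  pg_num=1
  # Round up to the next power of two.
  while [ "$pg_num" -lt "$pg_target" ]; do
    pg_num=$(( pg_num * 2 ))
  done
  echo "$pg_num"
}
```

For example, `suggest_pg_num 3 3` gives a target of 100 and prints 128.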

RBD block device

Create one RBD block device, lun1, in the Ceph cluster:

    rbd create pool_1/lun1 --size 10G

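Ceph access control

The keyring deployed by `ceph-deploy --overwrite-conf admin` carries far too many privileges. You can instead create a dedicated keyring, client.rbd, for the block device client nodes.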
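The original commands for this step are not shown, so the following is only a minimal sketch of how such a restricted identity might be created with `ceph auth get-or-create`. The capability strings (`mon 'allow r'`, `osd 'allow rwx pool=…'`) and the keyring path are assumptions; `create_rbd_client` is a hypothetical wrapper.

```shell
# Sketch: create a client.rbd identity limited to one pool.
# The caps and output path are assumptions, not taken from the original post.
create_rbd_client() {
  rbd_pool="$1"      # e.g. pool_1
  rbd_keyring="$2"   # e.g. /etc/ceph/ceph.client.rbd.keyring
  ceph auth get-or-create client.rbd \
    mon 'allow r' \
    osd "allow rwx pool=${rbd_pool}" \
    -o "${rbd_keyring}"
}
```

It would be called as `create_rbd_client pool_1 /etc/ceph/ceph.client.rbd.keyring` on a host with admin access, after which the resulting keyring file is copied to the k8s nodes.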

On the k8s node

    [root@node1 ceph]# ceph -s --name client.rbd

Map the device

    # rbd map pool_1/lun1 --name client.rbd

Attach the block device to the operating system and format it

    rbd map pool_1/lun1 --name client.rbd
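Only the `rbd map` command survives for this step, so here is a hedged sketch of what the map, format, and mount sequence could look like. The ext4 filesystem, the mount point, the `MKFS` override variable, and the function name `format_and_mount_rbd` are all assumptions introduced for illustration.

```shell
# Sketch: map an RBD image, create a filesystem on it, and mount it.
format_and_mount_rbd() {
  fm_image="$1"   # e.g. pool_1/lun1
  fm_mount="$2"   # hypothetical mount point, e.g. /mnt/lun1
  # rbd map prints the device path it created (for example /dev/rbd0).
  fm_dev=$(rbd map "$fm_image" --name client.rbd) || return 1
  # ext4 is an assumption; set MKFS to use another mkfs tool.
  ${MKFS:-mkfs.ext4} "$fm_dev" || return 1
  mkdir -p "$fm_mount" && mount "$fm_dev" "$fm_mount"
}
```

A call such as `format_and_mount_rbd pool_1/lun1 /mnt/lun1` needs root privileges and destroys any data already on the image.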

Create the PV and PVC

Base64-encode the contents of ceph.client.admin.keyring.
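The encoding command itself is missing from the post. A common approach is to pull the `key = …` value out of the keyring file and base64-encode just that value, since that is what a Kubernetes RBD secret stores. The sketch below assumes the usual keyring layout (`key = <value>` on its own line); `keyring_key_b64` is a hypothetical helper name.

```shell
# Sketch: print the base64-encoded key from a Ceph keyring file.
keyring_key_b64() {
  # $1 is the keyring path; the key line looks like "key = AQ...==".
  awk '$1 == "key" { printf "%s", $3 }' "$1" | base64 | tr -d '\n'
}
```

For example, `keyring_key_b64 /etc/ceph/ceph.client.admin.keyring` prints a single line that can be pasted into the secret.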

Using the output above, create the secret ceph-client-rbd.
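The secret manifest is not reproduced in the post. A minimal sketch, assuming the conventional `kubernetes.io/rbd` secret type and a single `key` field, could be rendered like this; `render_rbd_secret` is a hypothetical helper that only prints YAML.

```shell
# Sketch: print a Secret manifest named ceph-client-rbd.
# $1 is the base64-encoded Ceph key from the previous step.
render_rbd_secret() {
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ceph-client-rbd
type: kubernetes.io/rbd
data:
  key: $1
EOF
}
```

The output can be piped to `kubectl apply -f -` or saved to a file first for review.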

Create the PV. Note: the value here is `user: rbd`, not `user: client.rbd`.
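The PV manifest is also missing, so the following sketch shows one plausible shape using the in-tree `rbd` volume type, which assumes a Kubernetes version that still ships that plugin. The PV name `ceph-rbd-pv`, the monitor address, the access mode, `fsType`, and the reclaim policy are assumptions; the pool, image, user, and secret name come from the text above.

```shell
# Sketch: print a PersistentVolume manifest backed by pool_1/lun1.
# $1 is a Ceph monitor address such as host:6789 (assumed input).
render_rbd_pv() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ceph-rbd-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  rbd:
    monitors:
      - $1
    pool: pool_1
    image: lun1
    user: rbd
    secretRef:
      name: ceph-client-rbd
    fsType: ext4
    readOnly: false
EOF
}
```

Note that `user: rbd` omits the `client.` prefix, matching the warning above.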

Create the PVC.
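As with the PV, the claim manifest is not shown. A minimal sketch of a claim that could bind to a 10Gi pre-provisioned volume follows; the name `ceph-rbd-pvc` and the empty `storageClassName` (used so a default StorageClass does not intercept the claim) are assumptions.

```shell
# Sketch: print a PersistentVolumeClaim requesting 10Gi, ReadWriteOnce.
render_rbd_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-rbd-pvc
spec:
  storageClassName: ""
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
EOF
}
```

After applying it, `kubectl get pv,pvc` should show whether the claim has bound to the volume.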