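Preface

Most people back up and restore a k8s cluster through etcd. But sometimes we accidentally delete a single namespace. Suppose that ns held hundreds of services and they all vanished in an instant. What then?

Of course, you could redeploy everything through your CI/CD system, but that takes a long time. This is where VMware's Velero comes in.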

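Velero helps with:

- Disaster recovery: backing up and restoring k8s cluster resources

- Migration: copying cluster resources to other clusters (replicating and syncing cluster configuration across development, testing, and production, which simplifies environment setup)

Below I walk through how to use Velero for backup and migration.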

Velero repository: https://github.com/vmware-tanzu/velero

ACK plugin repository: https://github.com/AliyunContainerService/velero-plugin

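Download the Velero client

Velero consists of a client and a server. The server is deployed in the target k8s cluster, while the client is a command-line tool that runs locally.

- Go to Velero's Release page and download the client; it can be downloaded directly from GitHub

- Extract the release package

- Move the `velero` binary from the release package into a directory on your `$PATH`

- Run `velero -h` to verify the installation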
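The steps above can be sketched as a small shell script. It assumes a Linux amd64 machine, version v1.4.0 (the version that shows up in the shell prompts later in this post), and `/usr/local/bin` as the install directory. The helper names `velero_release_url` and `install_velero` are only illustrative.

```shell
# Sketch: download and install the Velero client from GitHub releases.
# Assumptions: the default version/OS/arch and the install dir are illustrative.

# Build the download URL for a given release, OS and architecture.
velero_release_url() {
  version="$1"; os="$2"; arch="$3"
  echo "https://github.com/vmware-tanzu/velero/releases/download/${version}/velero-${version}-${os}-${arch}.tar.gz"
}

# Download, extract, move the binary into a directory on $PATH, then verify.
install_velero() {
  version="${1:-v1.4.0}"; os="${2:-linux}"; arch="${3:-amd64}"
  dest="${4:-/usr/local/bin}"
  curl -fsSL -o velero.tar.gz "$(velero_release_url "$version" "$os" "$arch")" || return 1
  tar -xzf velero.tar.gz || return 1
  mv "velero-${version}-${os}-${arch}/velero" "$dest/" || return 1
  velero -h
}

# Usage (may need sudo to write to /usr/local/bin):
# install_velero v1.4.0 linux amd64
```

Nothing runs until `install_velero` is called, so the script can be sourced safely and the URL checked first.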

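Deploy the velero-plugin

Clone the code

    git clone https://github.com/AliyunContainerService/velero-plugin

Modify the configuration

Edit the `install/credentials-velero` file and fill in the `AccessKeyID` and `AccessKeySecret` obtained for the newly created user. The OSS EndPoint here is the access domain name of the OSS bucket created earlier.

Edit `install/01-velero.yaml` and fill in the OSS configuration.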

Deploy the Velero server to k8s

    # Create the namespace
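A minimal sketch of what this deployment step might look like, wrapped in a function so nothing runs until it is called. The `velero` namespace, the `cloud-credentials` secret name, and the `install/00-crds.yaml` file are assumptions based on Velero's usual conventions and the plugin repository layout, not taken from this post. Check them against the plugin's README before running.

```shell
# Sketch: deploy the Velero server from the cloned velero-plugin directory.
# Assumptions: namespace "velero", secret "cloud-credentials", and
# install/00-crds.yaml are not confirmed by this post.
deploy_velero() {
  # Create the namespace for the Velero server
  kubectl create namespace velero || return 1
  # Store the edited credentials file as a secret
  kubectl create secret generic cloud-credentials \
    --namespace velero \
    --from-file cloud=install/credentials-velero || return 1
  # Install the CRDs, then the Velero deployment with the OSS settings
  kubectl apply -f install/00-crds.yaml || return 1
  kubectl apply -f install/01-velero.yaml
}

# Usage (run from the root of the cloned repository):
# deploy_velero
# kubectl get pods -n velero
```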

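Backup test

Here we use Velero to back up the resources of one application in the cluster, so that they can be restored quickly after a failure or an accidental operation. First, deploy the test application with the following yaml:

    ---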

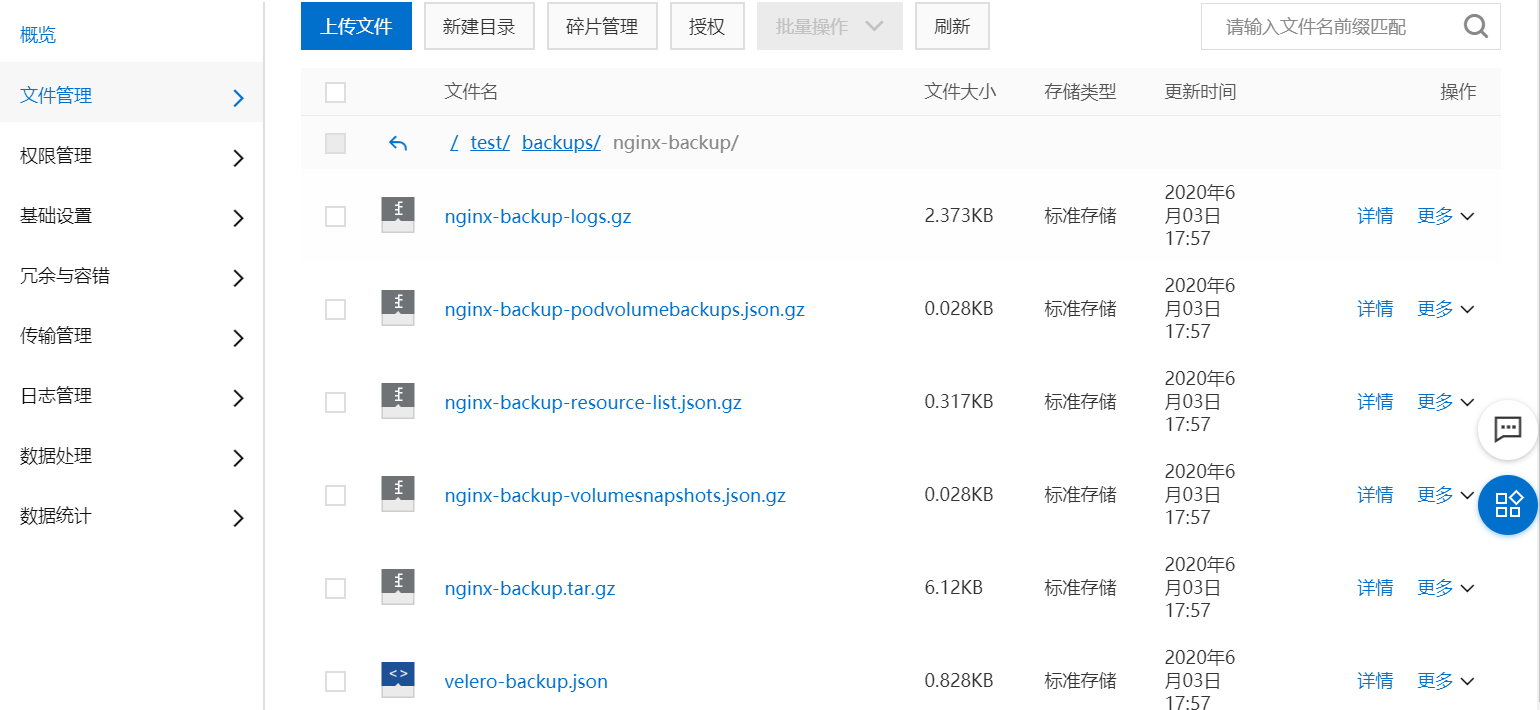

We can take a full backup or back up only the namespaces we need. Here we back up a single namespace, nginx-example:

    [rsync@velero-plugin]$ kubectl get pods -n nginx-example
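For reference, a namespace-scoped backup can be created along these lines. The backup name `nginx-backup` matches the one used in the restore commands in this post. The helper name `backup_namespace` is only illustrative.

```shell
# Back up a single namespace and wait for the backup to complete.
backup_namespace() {
  ns="$1"; name="$2"
  velero backup create "$name" --include-namespaces "$ns" --wait
}

# Usage:
# backup_namespace nginx-example nginx-backup
# velero backup describe nginx-backup   # inspect the result
```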

Delete the ns

    [rsync@velero-v1.4.0-linux-amd64]$ kubectl delete namespaces nginx-example

Restore

    [rsync@velero-v1.4.0-linux-amd64]$ ./velero restore create --from-backup nginx-backup --wait

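Migration works the same way as backup and restore. Next, a special case: deploy one more project after the backup, then restore, and see whether the restore deletes the newly deployed project.

    # A new tomcat container has been created

Run a restore

    [rsync@velero-v1.4.0-linux-amd64]$ ./velero restore create --from-backup nginx-backup

Delete the nginx deployment, then restore again

    [rsync@velero-v1.4.0-linux-amd64]$ kubectl delete deployment nginx-deployment -n nginx-example

Conclusion: a Velero restore does not overwrite the cluster. It only restores resources that do not currently exist in the cluster, and existing resources are not rolled back to their backed-up version. To roll a resource back, delete it before running the restore.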
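This behavior suggests a simple rollback pattern: delete the resource first, then restore. A minimal sketch, where `rollback_deployment` is a hypothetical helper name and the arguments are the names used in this post:

```shell
# Roll a deployment back to its backed-up state.
# Velero skips resources that already exist, so delete first, then restore.
rollback_deployment() {
  ns="$1"; deploy="$2"; backup="$3"
  kubectl delete deployment "$deploy" -n "$ns" || return 1
  velero restore create --from-backup "$backup" --wait
}

# Usage:
# rollback_deployment nginx-example nginx-deployment nginx-backup
```

Other resources in the backup that still exist in the cluster are left untouched by the restore, so only the deleted deployment is recreated.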

Advanced usage

You can set up a periodic scheduled backup

    # Back up every day at 01:00

Scheduled backups are named `<SCHEDULE NAME>-<TIMESTAMP>`, and the restore command is `velero restore create --from-backup <SCHEDULE NAME>-<TIMESTAMP>`.
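As an illustration, a daily 01:00 schedule could be created as below. The schedule name `nginx-daily` and the helper `schedule_daily_backup` are made up for this example, and `--schedule` takes a standard cron expression.

```shell
# Create a schedule that backs up one namespace every day at 01:00.
schedule_daily_backup() {
  name="$1"; ns="$2"
  # Cron fields: minute 0, hour 1, every day of month, every month, every weekday
  velero schedule create "$name" --schedule="0 1 * * *" --include-namespaces "$ns"
}

# Usage:
# schedule_daily_backup nginx-daily nginx-example
# velero backup get   # backups produced by the schedule appear here
```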

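To back up and restore persistent volumes, create the backup as follows:

    velero backup create nginx-backup-volume --snapshot-volumes --include-namespaces nginx-example

This backup creates snapshots of the cloud disks in the cluster's region (NAS and OSS storage are not supported yet). Restoring a cloud disk from a snapshot only works within the same region.

The restore command is:

    velero restore create --from-backup nginx-backup-volume --restore-volumes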

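Delete backups

- Option 1: delete the backup directly from the command line

      velero delete backups default-backup

- Option 2: let the backup expire automatically by passing a TTL when creating it

      velero backup create <BACKUP-NAME> --ttl <DURATION>

You can also add a specific label to resources. Labeled resources are excluded from backups.

    # Add the label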
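A sketch of how this might look, assuming the label in question is Velero's `velero.io/exclude-from-backup=true`. The original command is not shown in this post, so treat the label as an assumption. The helper name and the example resource are illustrative.

```shell
# Mark a resource so that Velero skips it during backups.
# Assumption: the label is velero.io/exclude-from-backup=true.
exclude_from_backup() {
  ns="$1"; resource="$2"
  kubectl label -n "$ns" "$resource" velero.io/exclude-from-backup=true
}

# Usage:
# exclude_from_backup nginx-example deployment/nginx-deployment
```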
